Inside Legal AI: What Tasks Teams Trust Machines With and Which Stay Human

Artificial intelligence has officially entered the legal mainstream—but trust hasn’t caught up. A recent survey of 252 legal professionals found that while most teams are experimenting with AI tools, very few are ready to hand over critical legal work entirely to machines. And as OpenAI’s latest move to halt the provision of direct legal advice shows, even the most advanced systems are still learning where the ethical and professional boundaries lie.

For in-house leaders, this shift signals a need for balance, not retreat. Legal departments should continue testing AI for routine, low-risk work while reinforcing the human oversight that protects quality, compliance, and reputation. The goal isn’t to eliminate human input; it’s to deploy technology in ways that make your people more effective.

Key Takeaways

- Two-thirds of legal professionals (67%) have had to override or correct an AI-generated legal output.

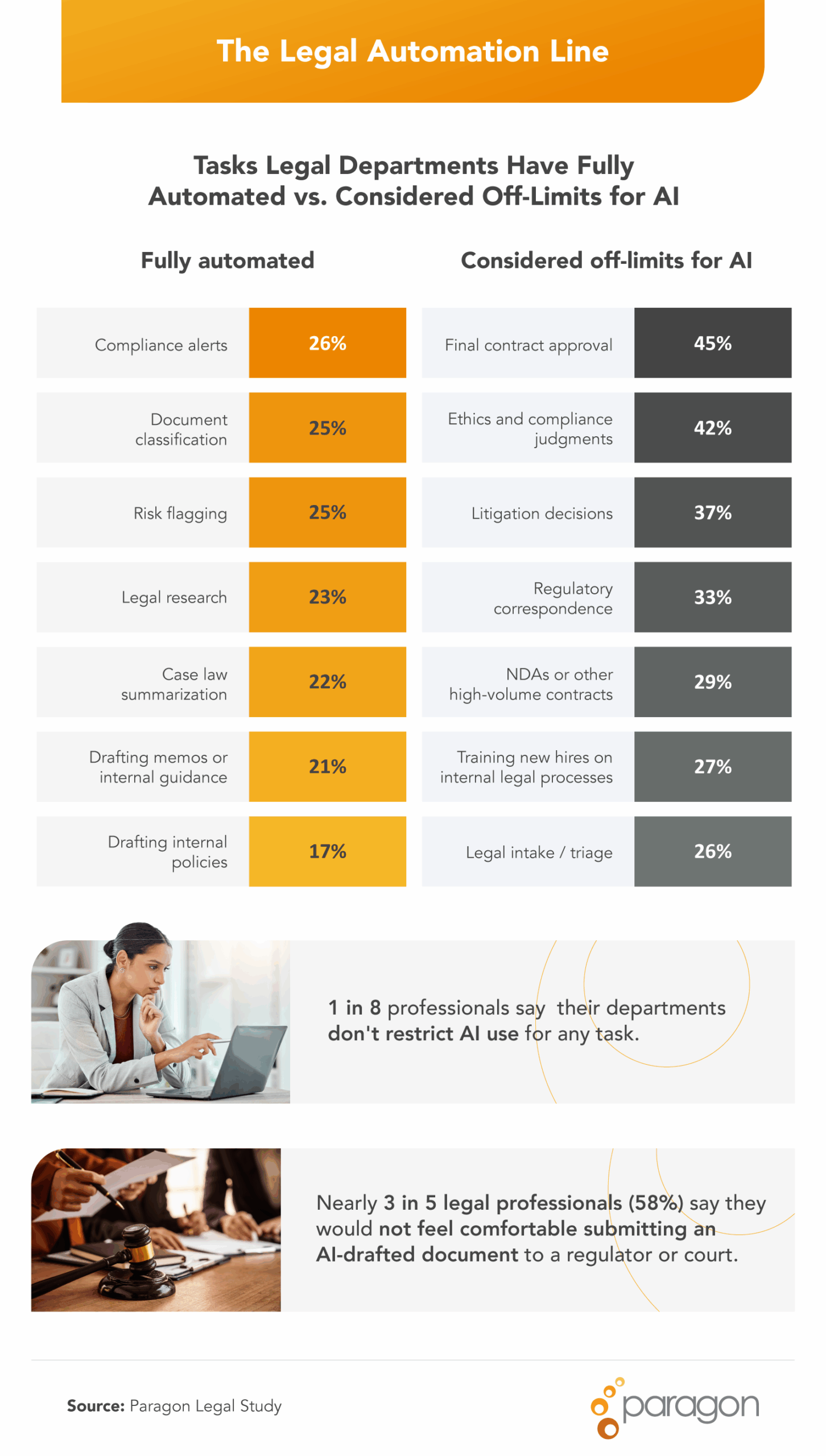

- Nearly 3 in 5 legal professionals (58%) say they would not feel comfortable submitting an AI-drafted document to a regulator or court.

- Nearly half of legal professionals (47%) say AI automation has sparked internal conflict within their legal team.

- Only 1 in 5 legal professionals (21%) place high trust in AI-generated legal work.

- 43% of legal professionals expect increased AI use to result in reduced hiring or staffing needs due to automation.

What’s In, What’s Off-Limits

AI is changing how legal teams operate, but many professionals still draw a firm line between acceptable and risky uses. For most, the technology serves as a tool for speed and efficiency, not judgment or final review.

- Two-thirds of legal professionals (67%) have had to override or correct an AI-generated legal output.

- Nearly 3 in 5 legal professionals (58%) say they would not feel comfortable submitting an AI-drafted document to a regulator or court.

These figures point to AI’s current limitations in producing legally reliable work without human input. Teams may use it to draft or summarize, but most still rely on attorneys to validate and finalize the output before submission.

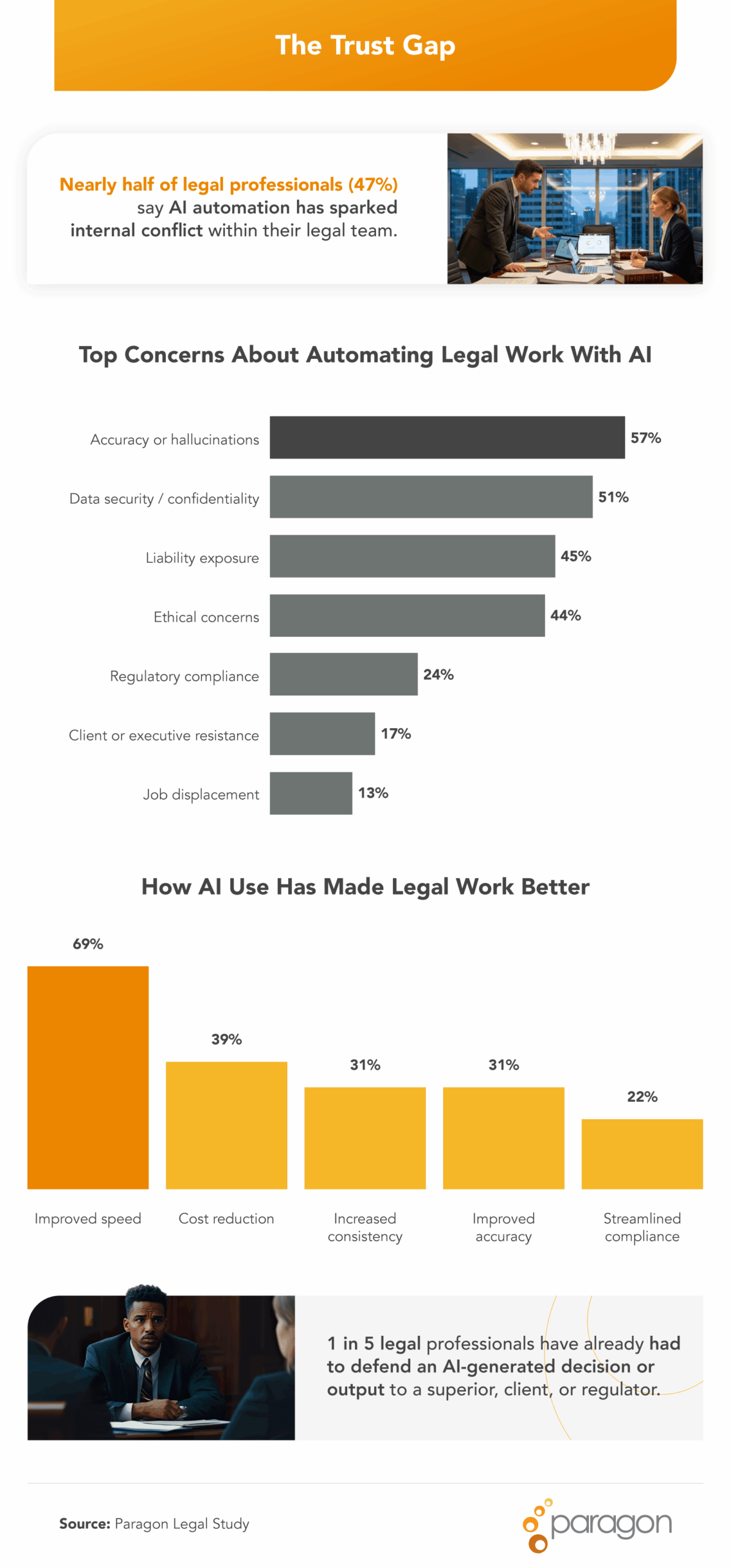

Why Legal Teams Still Hesitate To Rely on AI

Legal departments are cautiously optimistic about AI’s potential, but trust is far from universal. Many professionals report internal tension about when and how to use automation effectively.

- Nearly 1 in 2 legal professionals (47%) say AI automation has sparked internal conflict within their legal team.

- Only 1 in 5 legal professionals (21%) place high trust in AI-generated legal work, while 38% express moderate trust and 42% say they have little to no trust at all.

- Large companies (250–999 employees) are the most likely to place high trust in AI-generated legal work (24%), while small ones (10–49 employees) are the least likely (18%).

- 60% of legal professionals say they don’t trust AI systems to train new hires using their organization’s internal knowledge base and playbooks.

- When asked "Which of the following AI features would most increase your trust?", respondents answered:

  - Human sign-off required: 41%

  - Explainable decision-making: 20%

  - Built-in compliance guardrails: 17%

  - Audit trails: 6%

  - None, I would not trust AI regardless: 15%

Even as AI systems improve, legal teams remain reluctant to delegate critical or confidential work. Most trust grows only when human review and compliance checks are baked into the process. For now, oversight—not automation alone—remains the key to responsible adoption.

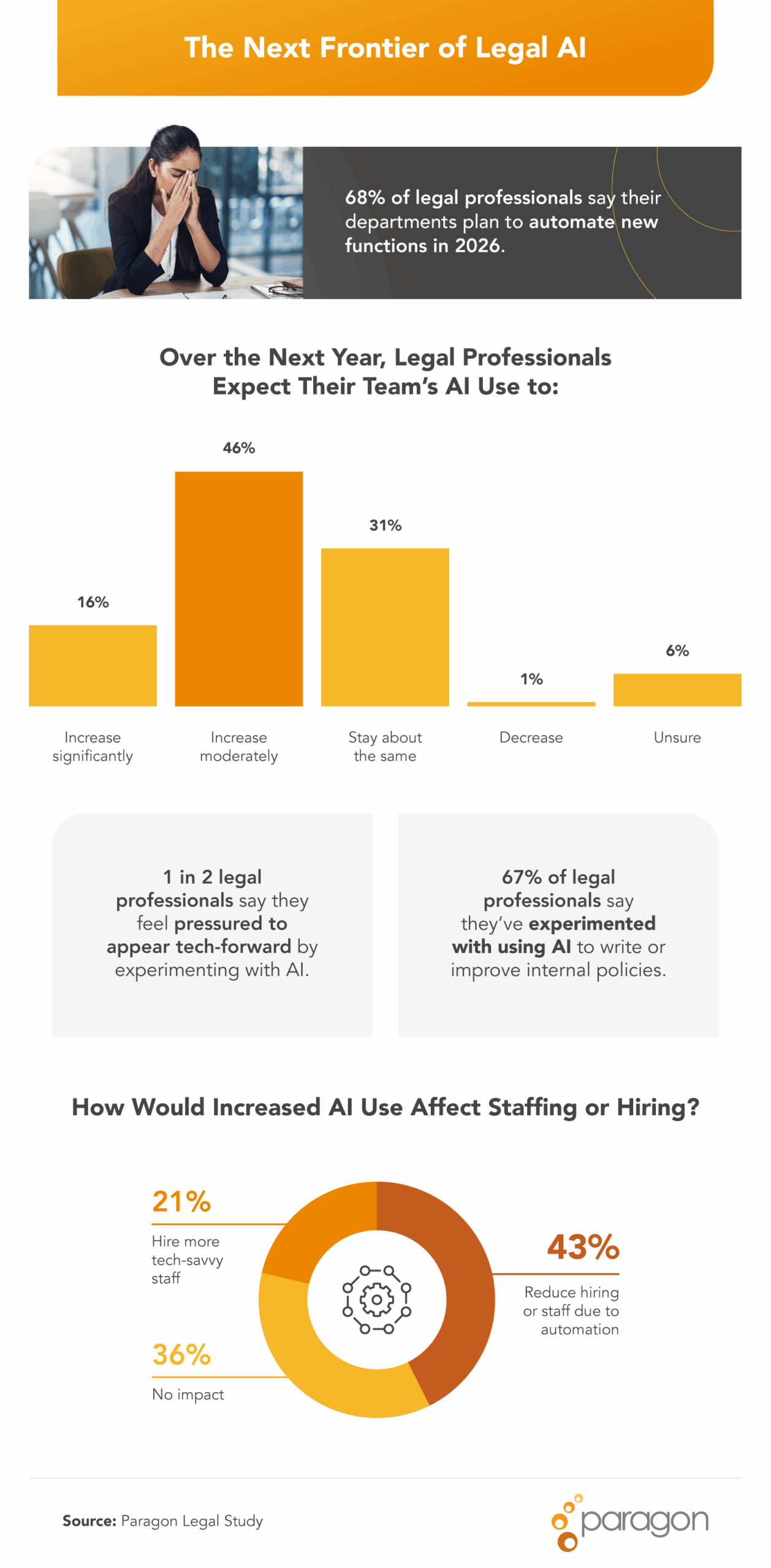

Legal Teams Prepare To Scale AI Use

Despite these hesitations, many legal teams expect automation to expand and to affect staffing as a result. Departments are preparing to manage new workflows in which AI plays a supporting role rather than a substitute one.

- 43% of legal professionals expect increased AI use to result in reduced hiring or staffing needs due to automation.

- Nearly half of micro legal departments (1–9 employees; 47%) and medium-sized departments (50–249 employees; 46%) expect automation to reduce hiring, compared with just 36% of small teams (10–49 employees) and 40% of enterprises (1,000+ employees).

These numbers suggest that while automation may reduce some operational roles, it will also create demand for new skills—particularly for legal professionals who can guide AI strategy, oversee compliance, and translate data insights into business impact. Flexible legal talent solutions, like those offered by Paragon, help departments scale intelligently without compromising expertise or agility.

Methodology

We surveyed 252 legal professionals to explore how in-house legal departments use (or avoid) artificial intelligence. Data was collected in October 2025.

About Paragon Legal

Paragon Legal is on a mission to make in-house legal practice a better experience for everyone. We provide legal departments at leading corporations with high-quality, flexible legal talent to help them meet their changing workload demands. At the same time, we offer talented attorneys and other legal professionals a way to practice law outside the traditional career path, empowering them to achieve both their professional and personal goals.

Fair Use Statement

The information in this article may be shared for noncommercial purposes only. When citing or redistributing this content, please provide proper attribution and a link to Paragon Legal.