Inside the Legal Department: How Leaders Are Defining the Boundaries of AI

As artificial intelligence becomes more deeply integrated into legal practice, legal leaders increasingly have to decide where to draw the line between innovation and integrity. According to a recent survey of 500 legal professionals and business leaders, trust in AI remains guarded, especially when human judgment and accountability are at stake.

The timing of these findings is especially relevant in light of OpenAI’s recent usage policy update, which bars using its tools to provide tailored legal advice without the involvement of a licensed professional. The move underscores the tension between advancing technology and professional ethics, a balance every legal leader must strike. For in-house teams, the takeaway is clear: AI is a powerful assistant, not a replacement. Legal departments should establish transparent guardrails, prioritize human oversight, and ensure compliance standards evolve as fast as the technology itself.

Key Takeaways

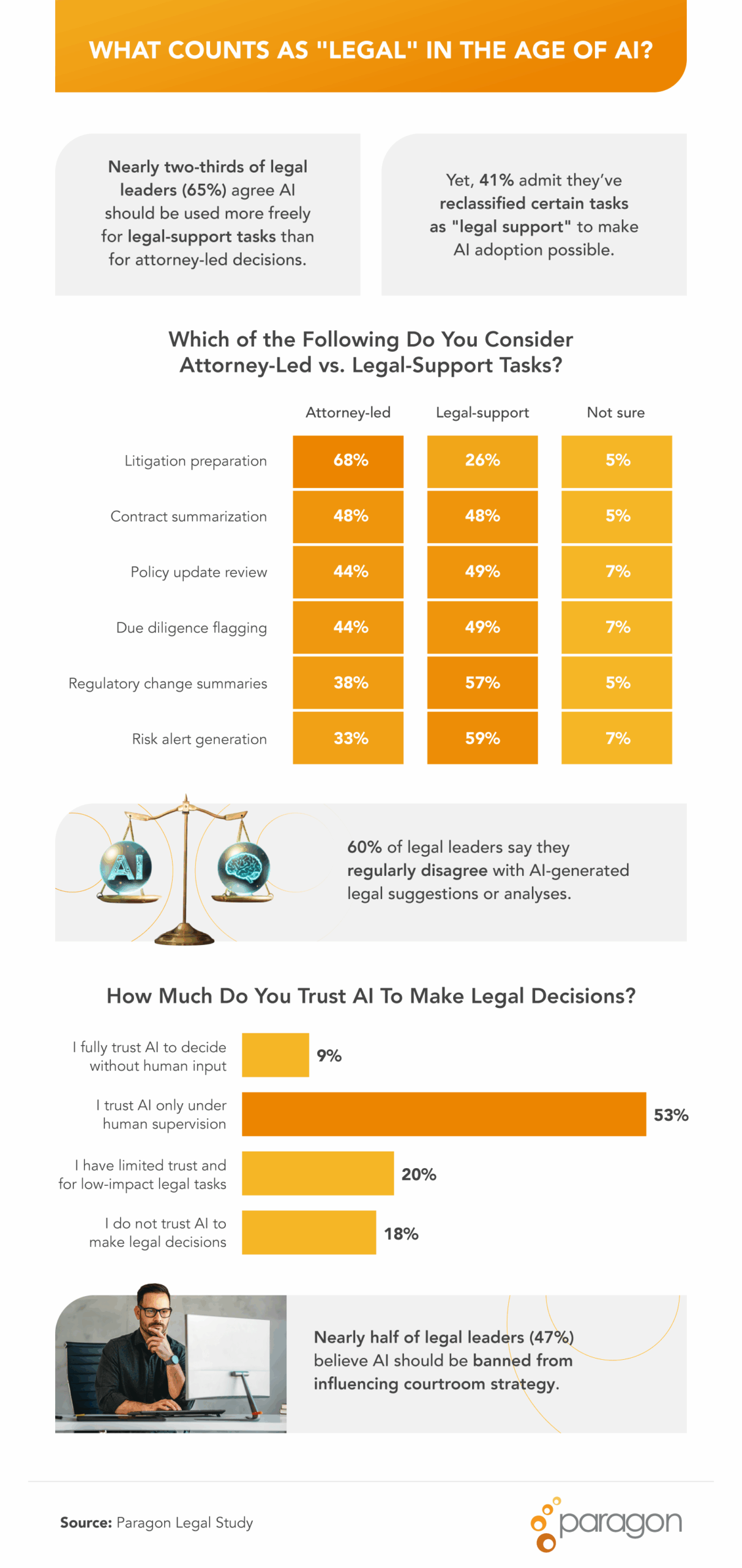

- 53% of legal leaders say they only trust AI when it operates under human supervision, showing that human judgment remains essential in legal decision-making.

- 37% trust AI to contribute to high-stakes legal decisions, indicating limited confidence in AI’s ability to interpret complex issues independently.

- Legal leaders use AI in roughly 27% of their daily tasks, reflecting how quickly the technology is becoming part of routine legal work.

- 39% believe their organizations are adopting AI too quickly, suggesting that implementation may be outpacing governance and training.

- 36% admitted to using AI-generated insights they do not fully trust, highlighting the tension between efficiency and reliability.

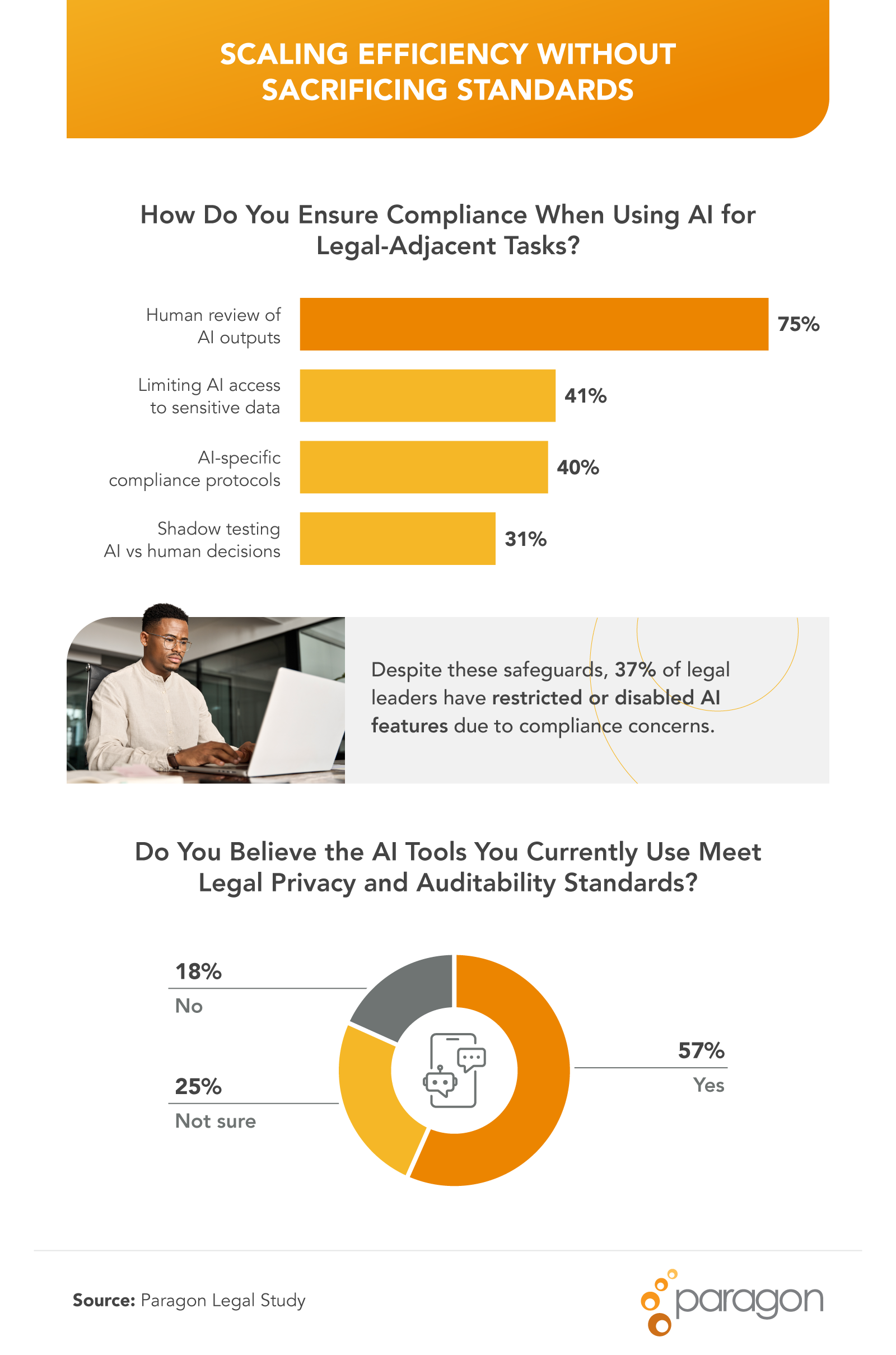

- 37% have restricted or disabled AI tools because of compliance concerns, emphasizing that risk management still drives AI oversight in legal departments.

- Overall, legal leaders appear open to AI’s potential but continue to prioritize caution, accountability, and control as adoption expands.

Where Legal Teams Draw the Line on AI

Legal leaders are split on how far AI should be allowed to influence legal decisions. While many see its value in analysis and efficiency, few are ready to cede interpretive authority.

- Nearly 1 in 3 legal leaders (31%) say they’ve changed a legal opinion based on an AI-generated recommendation.

- 44% believe AI should be allowed to interpret new laws or regulations, while 38% disagree.

- 37% trust AI to contribute to legal decision-making in high-stakes cases.

- 53% say they only trust AI when under human supervision.

- When asked who should be liable if AI contributes to a legal mistake:

  - 64% said the company that’s using the AI.

  - 60% pointed to the supervising legal professional.

  - 30% blamed the software vendor.

  - 7% said no one, calling AI mistakes an inherent risk.

Takeaway for legal leaders: Establish clear accountability frameworks before allowing AI to influence substantive legal decisions. Human sign-off should remain the standard, especially where regulatory interpretation or client impact is involved.

How AI Is Reshaping Legal Workflows

AI has already become a routine tool in many legal departments, but its adoption is uneven and often met with hesitation.

- Legal leaders report using AI in roughly 27% of their daily tasks.

- More than 1 in 3 (37%) have removed AI from a workflow due to quality, ethical, or legal concerns.

- 39% feel that their company is adopting AI in legal workflows too quickly.

- Over one-third (36%) admit to using AI-generated legal insights even when they don’t fully trust the output.

Takeaway for legal leaders: AI should enhance workflows, not rush them. Integrating technology responsibly requires robust training, continuous evaluation of tool performance, and clear policies that support informed human oversight.

How Legal Teams Keep AI in Check

Even as AI becomes a standard part of the legal toolkit, compliance concerns remain top of mind.

- 37% of legal leaders say they’ve restricted or disabled AI features due to compliance concerns.

- 42% have pushed back on AI adoption in legal or compliance work.

Takeaway for legal leaders: Oversight is as much about policy as it is about culture. Legal teams should proactively set boundaries for AI use, defining what’s acceptable, when human review is required, and how results are validated. Building internal “AI ethics checkpoints” helps teams maintain credibility while still leveraging the advantages of technology.

Methodology

We surveyed 500 legal professionals and business leaders to explore how they are integrating artificial intelligence into their legal workflows. Data was collected in October 2025.

About Paragon Legal

Paragon Legal is on a mission to make in-house legal practice a better experience for everyone. We provide legal departments at leading corporations with high-quality, flexible legal talent to help them meet their changing workload demands. At the same time, we offer talented attorneys and other legal professionals a way to practice law outside the traditional career path, empowering them to achieve both their professional and personal goals.

Fair Use Statement

Information in this article may be shared for noncommercial purposes with proper attribution. If you reference or republish any part of this content, please include a link back to Paragon Legal.